This post is a translation of an article written by David DeLuca (Senior Storage Solution Architect) and published on August 24, 2021.

There are many factors to consider when migrating data from on-premises to the cloud, including speed, efficiency, network bandwidth, and cost. A common challenge faced by many companies is choosing the right utility to copy large amounts of data from on-premises to an Amazon S3 bucket.

I often see customers start by getting data into S3 with a free data transfer utility or an AWS Snow Family device. The same customers may then use AWS DataSync to capture continuous incremental changes.

This type of scenario, where data is first copied to S3 with one tool and incremental updates are then applied with DataSync, raises several questions. How does DataSync behave when copying data to an S3 bucket that already contains files written by another data transfer utility? Does DataSync recognize that the existing objects match the on-premises files? Does it create a second copy of the data in S3, or does the data have to be retransmitted?

To avoid additional time, cost, and bandwidth consumption, it is important to understand exactly how DataSync identifies "changed" data. DataSync uses object metadata to identify incremental changes. This metadata does not exist if the data was transferred using a utility other than DataSync. In this case, DataSync needs to do some additional work to properly transfer the incremental changes to S3. Unexpected costs can occur depending on which storage class was used for the initial transfer.

In this post, I examine three different scenarios for copying on-premises data to an S3 bucket, each with different results:

- Use DataSync for both the initial copy and all incremental changes.

- Use DataSync to synchronize with data that a utility other than DataSync wrote to S3 Standard, S3 Intelligent-Tiering (Frequent Access or Infrequent Access tier), S3 Standard-IA, or S3 One Zone-IA.

- Use DataSync to synchronize with data that a utility other than DataSync wrote to S3 Glacier, S3 Glacier Deep Archive, or S3 Intelligent-Tiering (Archive Access or Deep Archive Access tier).

After reviewing the detailed results for each scenario, you can decide how to use DataSync to efficiently migrate and sync your data to Amazon S3 without incurring unexpected charges.

Solution overview

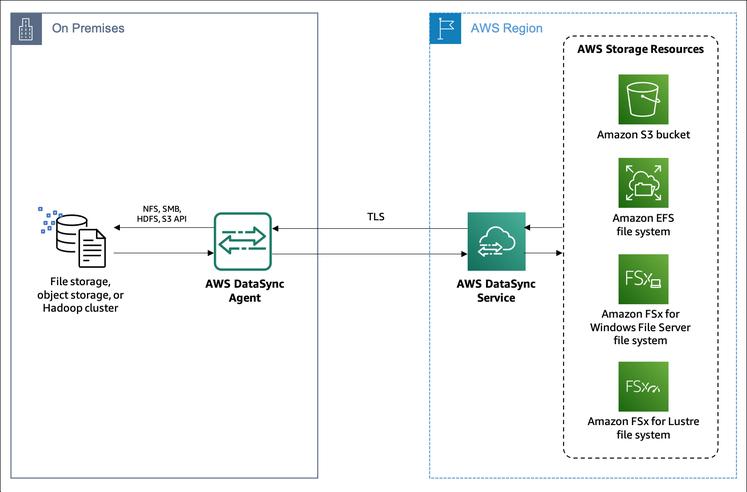

DataSync is an online data transfer service that simplifies, automates, and accelerates moving data between AWS storage services, as well as between on-premises storage systems and AWS storage services. You can use DataSync to migrate active datasets to AWS, archive data to free up on-premises storage capacity, replicate data to AWS for business continuity, and transfer data to the cloud for analysis and processing.

Now let's look at how DataSync transfers data from on-premises storage to AWS storage services. The DataSync agent is a virtual machine (VM) that reads data from and writes data to on-premises storage systems. The agent communicates with the DataSync service in the AWS Cloud, which reads data from and writes data to the AWS storage service.

A DataSync task consists of a pair of locations between which data is transferred. When you create a task, you define both the source and destination locations. For more information on setting up DataSync, see the DataSync User Guide.
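To make the source/destination pairing concrete, here is a minimal sketch that builds the keyword arguments for boto3's DataSync `create_task` call. The ARNs and task name you pass in are assumptions for illustration, and only two task options are shown; `TransferMode="CHANGED"` corresponds to the "transfer only data that has changed" setting discussed later in this post.

```python
def build_create_task_request(source_location_arn: str,
                              destination_location_arn: str,
                              name: str) -> dict:
    """Return keyword arguments for boto3's DataSync create_task call."""
    return {
        "SourceLocationArn": source_location_arn,
        "DestinationLocationArn": destination_location_arn,
        "Name": name,
        "Options": {
            # Transfer only files whose data or metadata differs from the destination.
            "TransferMode": "CHANGED",
            # Verify only the files transferred in this execution.
            "VerifyMode": "ONLY_FILES_TRANSFERRED",
        },
    }


def create_task(request: dict) -> str:
    """Create the task in AWS (needs boto3 and credentials) and return its TaskArn."""
    import boto3  # imported lazily so the builder above works without boto3

    client = boto3.client("datasync")
    return client.create_task(**request)["TaskArn"]
```

Separating the request builder from the API call keeps the task definition easy to review or store alongside other configuration before anything is created in AWS.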

The following figure shows the architecture of my test environment. The source location is a set of Network File System (NFS) shares hosted on an on-premises Linux server. The destination location is a versioning-enabled S3 bucket. Three DataSync tasks are configured, one for each scenario, with the following task options:

To get a deeper understanding of how DataSync works internally, I use three tools:

A brief description of Amazon S3

Because this post focuses on transferring data to an S3 bucket, let's review a few relevant S3 topics. Amazon S3 is an object storage service that offers industry-leading scalability, data availability, security, and performance.

S3 storage classes

S3 offers a range of storage classes designed for different access patterns.

DataSync allows you to write directly to any S3 storage class.
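As a sketch of how the target storage class is chosen, the storage class is set on the DataSync S3 location rather than on the task. The function below builds keyword arguments for boto3's `create_location_s3`; the bucket name and IAM role ARN are hypothetical inputs, and the set of storage classes is only the subset discussed in this post.

```python
# Subset of the S3StorageClass values accepted by DataSync's CreateLocationS3.
VALID_STORAGE_CLASSES = {
    "STANDARD", "STANDARD_IA", "ONEZONE_IA",
    "INTELLIGENT_TIERING", "GLACIER", "DEEP_ARCHIVE",
}


def build_s3_location_request(bucket_name: str, storage_class: str,
                              role_arn: str, subdirectory: str = "/") -> dict:
    """Return keyword arguments for boto3's DataSync create_location_s3 call."""
    if storage_class not in VALID_STORAGE_CLASSES:
        raise ValueError(f"unsupported storage class: {storage_class}")
    return {
        "S3BucketArn": f"arn:aws:s3:::{bucket_name}",
        "S3StorageClass": storage_class,  # class DataSync writes new objects in
        "Subdirectory": subdirectory,
        "S3Config": {"BucketAccessRoleArn": role_arn},  # role DataSync assumes
    }
```

The result can be passed as `boto3.client("datasync").create_location_s3(**request)`.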

S3 metadata

When you copy file data to Amazon S3, DataSync automatically copies each file to a single S3 object in a one-to-one relationship and saves the source file's POSIX metadata as Amazon S3 object metadata. If the DataSync task is configured to “transfer only data that has changed”, DataSync compares the file's metadata with the metadata of the corresponding S3 object to determine whether the file needs to be transferred. The following figure shows an example of the metadata of an S3 object written by DataSync.
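The comparison described above can be modeled roughly as follows. This is a simplified sketch, not DataSync's actual implementation: the metadata key names are hypothetical, and DataSync may compare additional attributes. The key point it illustrates is that an object written by another tool has no such metadata, so the metadata comparison alone cannot decide whether it matches the source file.

```python
from dataclasses import dataclass
from typing import Mapping, Optional

# Hypothetical metadata key names for this sketch; they are not guaranteed
# to match the keys DataSync actually writes.
REQUIRED_KEYS = ("file-mtime", "file-owner", "file-group", "file-permissions")


@dataclass(frozen=True)
class FileMetadata:
    size: int
    mtime_ns: int
    uid: int
    gid: int
    mode: int


def to_object_metadata(meta: FileMetadata) -> dict:
    """POSIX attributes stored as S3 user metadata (size is the object length)."""
    return {
        "file-mtime": str(meta.mtime_ns),
        "file-owner": str(meta.uid),
        "file-group": str(meta.gid),
        "file-permissions": format(meta.mode, "o"),
    }


def compare(src: FileMetadata, obj_size: Optional[int],
            obj_metadata: Optional[Mapping[str, str]]) -> str:
    """Classify a source file against its destination object."""
    if obj_size is None:
        return "new"  # no object with this key yet
    if not obj_metadata or not all(k in obj_metadata for k in REQUIRED_KEYS):
        return "no-metadata"  # written by another tool; metadata can't decide
    stored = FileMetadata(
        size=obj_size,
        mtime_ns=int(obj_metadata["file-mtime"]),
        uid=int(obj_metadata["file-owner"]),
        gid=int(obj_metadata["file-group"]),
        mode=int(obj_metadata["file-permissions"], 8),
    )
    return "unchanged" if stored == src else "changed"
```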

S3 API operations

S3 provides a REST API that lets you access S3 buckets and objects programmatically. This post focuses on the following operations:

S3 versioning

Amazon S3 Versioning is a way to keep multiple versions of an object in the same bucket. You can use S3 Versioning to preserve, retrieve, and restore every version of every object stored in your bucket. Carefully consider the impact of versioning when transferring data to an S3 bucket with DataSync.
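Why versioning matters here: S3 object metadata cannot be edited in place, so attaching new metadata means copying the object onto itself, and in a versioning-enabled bucket that copy becomes a new current version while the old one is retained as a noncurrent version. The toy model below (an in-memory assumption, not an S3 client) shows the resulting growth in stored bytes.

```python
from collections import defaultdict


class VersionedBucket:
    """Toy in-memory model of a versioning-enabled bucket."""

    def __init__(self):
        self._versions = defaultdict(list)  # key -> list of (size, metadata)

    def put(self, key, size, metadata=None):
        # Every write appends a new version; earlier versions are kept.
        self._versions[key].append((size, dict(metadata or {})))

    def copy_in_place(self, key, new_metadata):
        # Copying an object onto itself to replace its metadata writes a new
        # version with the same data; the previous version becomes noncurrent.
        size, _ = self._versions[key][-1]
        self.put(key, size, new_metadata)

    def version_count(self, key):
        return len(self._versions[key])

    def stored_bytes(self):
        # Storage is billed for every version, current and noncurrent.
        return sum(size for versions in self._versions.values()
                   for size, _ in versions)
```

For example, one 100-byte object that receives new metadata through an in-place copy ends up with two versions and 200 stored bytes.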

Walkthrough scenarios

Scenario 1: Use DataSync to perform an initial synchronization of data to an S3 bucket.

This scenario demonstrates transferring files from an on-premises NFS share to an empty S3 bucket. It establishes a baseline for understanding DataSync's behavior in scenarios 2 and 3. Because DataSync performs the initial copy, the S3 storage class has no effect on the outcome.

- The on-premises NFS share contains two files, “TestFile1” and “TestFile2”. The S3 bucket is empty. Run the DataSync task to transfer the NFS files to S3. When the task completes, the results look like this:

- Add two files (“TestFile3” and “TestFile4”) to the NFS share. Run the DataSync task a second time. When the task completes, the results look like this:

- Run the DataSync task a third time without adding any new files to the NFS share. When the task completes, the results look like this:
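The pattern across these three runs can be sketched with a tiny model, under the simplifying assumption that change detection is a pure metadata comparison and that data and metadata are written together. Files are represented as hypothetical `(size, mtime)` tuples; this illustrates the expected behavior and does not reproduce DataSync's actual task reports.

```python
def run_task(source: dict, destination: dict) -> list:
    """Copy new or changed files and record their metadata with each object.

    source and destination map file names to (size, mtime) tuples.
    Returns the names transferred in this run, in sorted order.
    """
    transferred = []
    for name, meta in sorted(source.items()):
        if destination.get(name) != meta:  # object missing or metadata differs
            destination[name] = meta  # data and metadata written in one step
            transferred.append(name)
    return transferred
```

Running it three times against the file sets in the steps above transfers `TestFile1` and `TestFile2`, then only `TestFile3` and `TestFile4`, and finally nothing.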

Scenario 2: Use DataSync to synchronize data with existing S3 data whose storage class is S3 Standard, S3 Intelligent-Tiering (Frequent or Infrequent Access tier), S3 Standard-IA, or S3 One Zone-IA.

Unlike the first scenario, the S3 bucket is not empty. It contains two objects with the same data as the two files on the on-premises file share. However, these objects were uploaded to S3 with a utility other than DataSync, so they have no POSIX metadata.

- The on-premises NFS share contains two files, “TestFile1” and “TestFile2”. A copy of the same data is stored in the S3 bucket in the S3 Standard storage class.

- Add two files (“TestFile3” and “TestFile4”) to the NFS share. Run the DataSync task for the first time. When the task completes, the results look like this:

- Run the DataSync task a second time without adding any new files to the NFS share. When the task completes, the results look like this:
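The overhead in this scenario can be estimated with a rough calculator. It assumes one GET and one COPY request per pre-existing object, based on the request types named in this post's summary, and assumes that versioning keeps the original version after each in-place copy. The per-1,000-request prices are caller-supplied placeholders; check current S3 pricing for real values.

```python
def scenario2_overhead(object_sizes, versioning_enabled,
                       get_price_per_1000, copy_price_per_1000):
    """Estimate extra work when DataSync syncs with objects lacking its metadata.

    Simplifying assumption: one GET and one COPY request per pre-existing object.
    Prices are placeholders supplied by the caller, not actual S3 prices.
    """
    n = len(object_sizes)
    request_cost = n * (get_price_per_1000 + copy_price_per_1000) / 1000
    # With versioning enabled, each in-place copy leaves the old version behind,
    # so the stored bytes for these objects roughly double.
    extra_stored_bytes = sum(object_sizes) if versioning_enabled else 0
    return {
        "get_requests": n,
        "copy_requests": n,
        "request_cost": request_cost,
        "extra_stored_bytes": extra_stored_bytes,
        "bytes_resent": 0,  # existing data is not sent again from on premises
    }
```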

Scenario 3: Use DataSync to synchronize data with existing S3 data whose storage class is Amazon S3 Glacier, S3 Glacier Deep Archive, or S3 Intelligent-Tiering (Archive or Deep Archive tier).

As in scenario 2, the S3 bucket is not empty. It contains two objects with the same data as the two files on the on-premises file share, and these objects have no POSIX metadata because they were uploaded to S3 with a utility other than DataSync. However, unlike the previous scenario, these objects reside in a storage class intended for long-term archiving.

- The on-premises NFS share contains two files, “TestFile1” and “TestFile2”. The S3 bucket contains a copy of the same data in the Amazon S3 Glacier storage class.

- Add two files (“TestFile3” and “TestFile4”) to the NFS share. Run the DataSync task for the first time. When the task completes, the results look like this:

- Run the DataSync task a second time without adding any new files to the NFS share. When the task completes, the results look like this:
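To see what retransmission means for the network, the arithmetic can be sketched as below. The model assumes that archived objects cannot be read without a restore, so their data is sent again in full, whereas retrievable objects (scenario 2) are not resent. The transfer time is idealized at a sustained rate and ignores protocol overhead.

```python
def first_run_bytes(existing_sizes, new_sizes, existing_archived):
    """Bytes sent from on premises in the first run of scenario 2 or 3.

    existing_sizes: sizes of files already in S3, written by another utility.
    new_sizes: sizes of files added to the NFS share since that initial copy.
    existing_archived: True if the existing objects are in an archive class or tier.
    """
    # Archived objects can't be read back without a restore, so their data is resent.
    resent = sum(existing_sizes) if existing_archived else 0
    return sum(new_sizes) + resent


def transfer_seconds(num_bytes, bandwidth_mbps):
    """Idealized time to send num_bytes at a sustained rate in megabits per second."""
    return num_bytes * 8 / (bandwidth_mbps * 1_000_000)
```

With two existing 100-byte files and two new 50-byte files, the first run sends 100 bytes when the existing objects are retrievable and 300 bytes when they are archived.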

Summary

In this post, I've taken a deep dive into how AWS DataSync behaves when copying data to an empty S3 bucket compared to an S3 bucket that already contains data. Here are the findings:

In scenario 1, DataSync performed the initial copy from on premises to an empty S3 bucket. DataSync was able to write each file and the required metadata in one step. This is a very efficient process that makes effective use of network bandwidth, saves time, and reduces overall costs. Most importantly, this approach works with any Amazon S3 storage class.

In scenario 2, DataSync synchronized with data previously transferred to S3 in S3 Standard, S3 Intelligent-Tiering (Frequent or Infrequent Access tier), S3 Standard-IA, or S3 One Zone-IA. DataSync did not have to retransmit the data over the network, but it created a new copy of each S3 object. This approach is also bandwidth-efficient, but it incurs additional S3 request charges for GET and COPY operations.

In scenario 3, DataSync synchronized with data previously transferred to S3 in Amazon S3 Glacier, S3 Glacier Deep Archive, or S3 Intelligent-Tiering (Archive or Deep Archive tier). Here, each file had to be retransmitted over the network. This is the least efficient approach, because it results in additional S3 request charges, more bandwidth usage, and longer transfer times.

Regardless of the S3 storage class, if your bucket has S3 Versioning enabled, synchronizing with previously transferred S3 data creates a duplicate copy (an additional version) of each object. As both scenarios 2 and 3 show, this results in additional S3 storage costs.

If you're using DataSync for continuous data synchronization, we recommend that you use DataSync for the initial transfer to S3 whenever possible. If you copied the data to S3 by any means other than DataSync, make sure it is not stored in Amazon S3 Glacier, S3 Glacier Deep Archive, or S3 Intelligent-Tiering (Archive or Deep Archive tier). We also recommend that you suspend S3 Versioning on that bucket if it is currently enabled.
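Before pointing DataSync at a bucket that another tool populated, you could check for objects in the storage classes this recommendation warns about. The sketch below is one possible pre-flight check, not an AWS-provided tool: it lists objects with boto3's `list_objects_v2` paginator and, for Intelligent-Tiering objects, reads `ArchiveStatus` from `head_object`, because the archive tiers are not reported by the listing. It flags only the classes and tiers named above.

```python
ARCHIVE_STORAGE_CLASSES = {"GLACIER", "DEEP_ARCHIVE"}
ARCHIVE_TIERS = {"ARCHIVE_ACCESS", "DEEP_ARCHIVE_ACCESS"}


def is_archived(storage_class, archive_status=None):
    """True for the archive classes and Intelligent-Tiering archive tiers."""
    if storage_class in ARCHIVE_STORAGE_CLASSES:
        return True
    return storage_class == "INTELLIGENT_TIERING" and archive_status in ARCHIVE_TIERS


def find_archived_objects(bucket, prefix=""):
    """Return keys that DataSync would have to retransmit (needs boto3)."""
    import boto3  # imported lazily so is_archived works without boto3

    s3 = boto3.client("s3")
    archived = []
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            storage_class = obj.get("StorageClass", "STANDARD")
            status = None
            if storage_class == "INTELLIGENT_TIERING":
                # ArchiveStatus is returned by HeadObject, not by ListObjectsV2.
                head = s3.head_object(Bucket=bucket, Key=obj["Key"])
                status = head.get("ArchiveStatus")
            if is_archived(storage_class, status):
                archived.append(obj["Key"])
    return archived
```

Calling `head_object` only for Intelligent-Tiering objects keeps the number of extra requests proportional to the objects that could actually be in an archive tier.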

Thank you for reading about AWS DataSync and Amazon S3.
