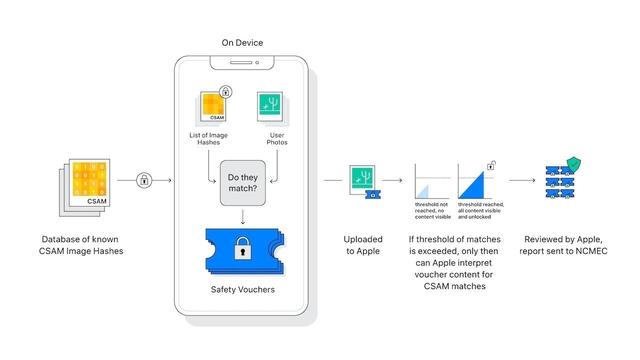

Apple has announced a policy of scanning images uploaded to iCloud to combat child sexual abuse (so-called child pornography), and the plan has drawn criticism from various quarters.

Against this backdrop, Apple has reportedly acknowledged that it has been scanning iCloud Mail attachments since 2019 to detect CSAM (content depicting sexually explicit activities involving children). iCloud Photos and iCloud backups, on the other hand, are not scanned.

Apple made this statement in response to an inquiry from the US site 9to5Mac. The site had contacted Apple after an internal email surfaced in evidence submitted in the lawsuit with Epic Games. In that email, Apple executive Eric Friedman described Apple as the biggest hotbed for distributing child pornography. The obvious question was: if Apple wasn't scanning iCloud Photos, how could it know it was a hotbed?

According to 9to5Mac, there were plenty of other clues that Apple was scanning iCloud Mail. For example, Apple's "Child Safety" page (web archive) stated: "Apple uses image matching technology to help find and report child exploitation. Much like spam filters in email, our systems use electronic signatures to find suspected child exploitation."

In addition, Apple's chief privacy officer Jane Horvath said in January 2020 that Apple uses screening technology to look for illegal images.

Asked about Friedman's remark, Apple said it has never scanned iCloud Photos. It did confirm, however, that since 2019 it has scanned incoming and outgoing iCloud Mail for CSAM images. Because iCloud Mail is not end-to-end encrypted, scanning attachments as they pass through Apple's servers appears to be straightforward.

Apple also indicated that it performs some limited scanning of other data, but declined to say what, beyond describing it as very small in scale. This "other data" does not include iCloud backups.

9to5Mac does not fault Apple's answer. On the contrary, it pointed out that Friedman's claim that Apple is the biggest hotbed of child pornography may not be based on hard data and may simply be an inference. Apple files only a few hundred CSAM reports each year, which suggests its email scanning has not turned up evidence of a large-scale problem.

Friedman's remark reads: "The spotlight at Facebook etc. is all on trust and safety (fake accounts, etc.). In privacy, they suck. Our priorities are the inverse. Which is why we are the greatest platform for distributing child porn, etc."

In other words, Facebook and other cloud services crack down on child pornography even at the expense of privacy, whereas Apple, by putting privacy first, does not police such content enough. That appears to be his point.

Indeed, Google Drive's Terms of Service state that Google "may review content to determine whether it is illegal or violates our Program Policies," which leaves room for scanning (although this does not say outright that Google actually screens content).

It is not unusual for other companies to scan content, at least to crack down on child pornography. In fact, Google has found illegal images in Gmail and reported them to the authorities.

Apple has arguably drawn more attention and criticism than other tech companies, even though those companies have long followed a policy of putting the crackdown on child pornography ahead of privacy protection. Still, since Apple consistently asserts that privacy is a fundamental human right, it is only natural that it is held to a stricter standard than other companies.

Source: 9to5Mac
